The original aim of this work was to isolate the corners of a colour transition in a texture or image. Because this isn't sensitive enough, and changes in direction are not always as clear as they first seem, it morphed into edge detection pretty quickly.

It then morphed into a bigger question: how can I isolate the pattern or edges from an image or texture, convert them to vertices, and then project them onto a mesh?

I'm only going to briefly go over the principles.

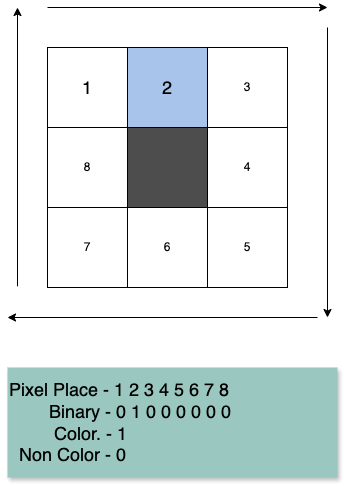

The first part is quite manual. Consider this:

The principle is simple: coloured pixels are represented with 1s and non-coloured pixels with 0s.

We then go round the centre of the pixel grid: left → right along the top, top → bottom down the right side, right → left along the bottom, and bottom → top up the left side. This gives an 8-digit piece of binary.

We can store this binary in a dictionary of sorts. When we sample a pixel of an image, we use the current pixel as the central black one above and reconstruct the binary from its neighbours, based on whether each one has colour or not in this primitive version.
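As a rough illustration of the neighbour walk described above, here is a minimal sketch. It assumes the image has already been reduced to a hypothetical `grid` of 0s and 1s (an array of rows); the names `NEIGHBOUR_OFFSETS` and `neighbourCode` are made up for this example.

```javascript
// Clockwise neighbour offsets [dx, dy], starting at the top-left corner.
const NEIGHBOUR_OFFSETS = [
  [-1, -1], [0, -1], [1, -1], // top row, left to right
  [1, 0],                     // right
  [1, 1], [0, 1], [-1, 1],    // bottom row, right to left
  [-1, 0],                    // left
];

// `grid` is a hypothetical array of rows holding 0 (no colour) or 1 (colour).
function neighbourCode(grid, x, y) {
  let code = '';
  for (const [dx, dy] of NEIGHBOUR_OFFSETS) {
    const row = grid[y + dy];
    const value = row === undefined ? undefined : row[x + dx];
    code += value === 1 ? '1' : '0'; // cells outside the grid count as 0
  }
  return code;
}

const grid = [
  [0, 0, 0],
  [0, 1, 1],
  [0, 1, 1],
];
console.log(neighbourCode(grid, 1, 1)); // "00011100"
```

The centre pixel here sits on the top-left corner of a filled block, so only its right, bottom-right and bottom neighbours are set.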

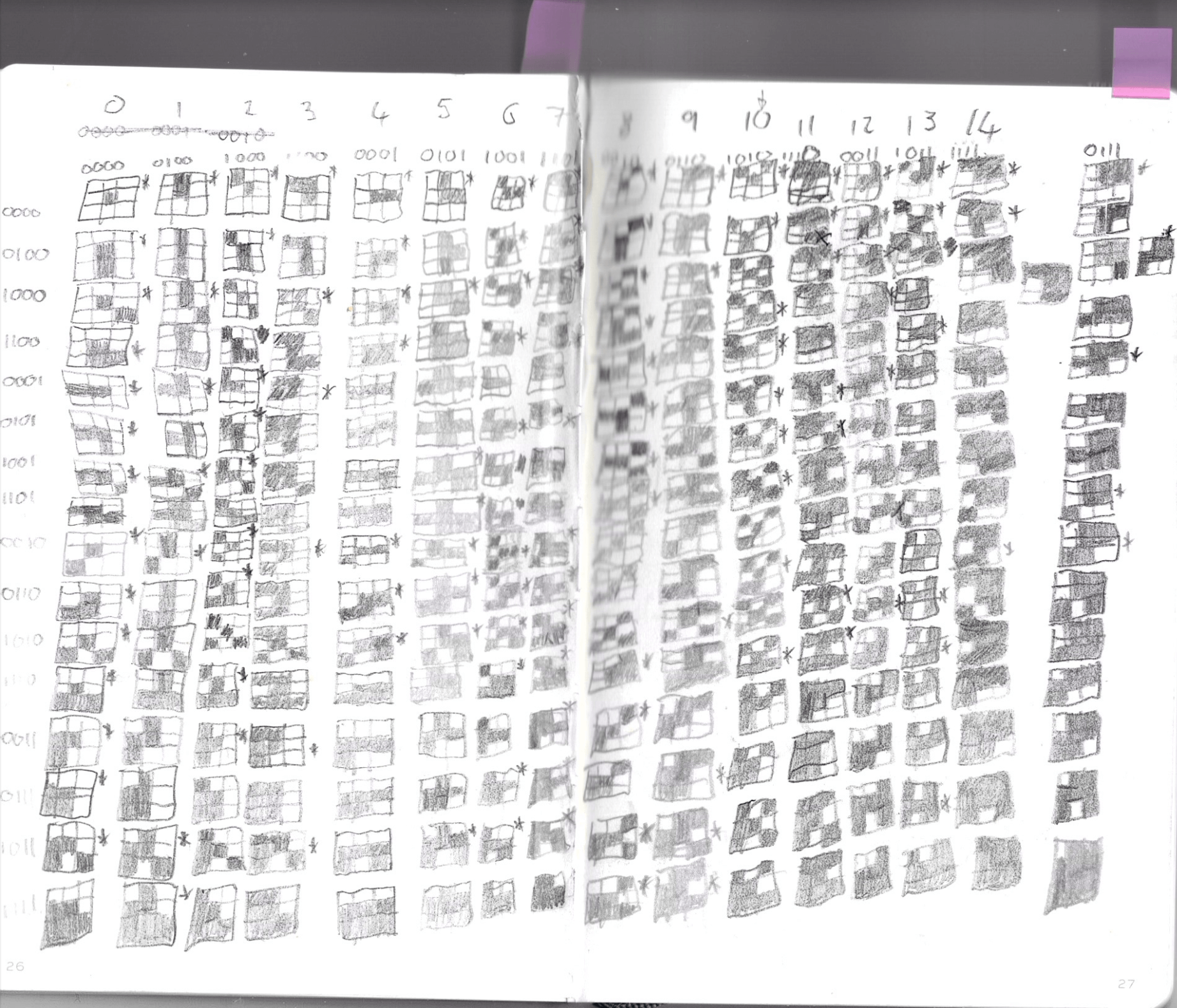

So if we know there are 9 cells in the grid, each either 0 or 1, and we discount the middle one, there are 8 neighbours left. That gives 2^8 = 256 possible combinations. Now you understand the hero image: it is a badly drawn diagram of all possible outcomes of a pixel grid.

So we now have the map of what's an edge or a change in direction.
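To make the shape of such a map concrete, here is a small sketch that enumerates all 256 codes into an object. The classification rule is a stand-in and not the map used in this post: it only flags the four codes a pixel sees when it sits on a convex corner of a filled region. The value `0` for "change in direction" mirrors the `data[item.d] === 0` check in the sampling code; `CORNER_CODES` and `buildLookup` are hypothetical names.

```javascript
// Hypothetical rule, NOT the author's map: three adjacent set neighbours
// centred on a diagonal. Bit order is clockwise from the top-left neighbour.
const CORNER_CODES = new Set(['01110000', '00011100', '00000111', '11000001']);

function buildLookup() {
  const lookup = {};
  for (let n = 0; n < 256; n++) {
    const code = n.toString(2).padStart(8, '0'); // e.g. 28 -> "00011100"
    lookup[code] = CORNER_CODES.has(code) ? 0 : 1; // 0 marks a change in direction
  }
  return lookup;
}

const lookup = buildLookup();
console.log(Object.keys(lookup).length); // 256
console.log(lookup['00011100']); // 0
```

A real map would need to decide a value for every one of the 256 codes, which is the manual part.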

We need sample data to do lookups in this map. If you'd like to see the map, i.e. the object with the values in, just contact me: [email protected]. The way we sample the image is shown below.

```javascript
function draw() {
  const canvas = document.getElementById('canvas1');
  canvas.width = 150;
  canvas.height = 150;

  canvas.style.width = '500px';
  canvas.style.height = '300px';
  const ctx = canvas.getContext('2d');
  ctx.scale(1, 1);

  canvas.width = window.innerWidth;
  canvas.height = window.innerHeight;

  const hexSize = 100;
  const hexWidth = Math.sqrt(3) * hexSize;
  const hexHeight = 2 * hexSize;

  function drawHexagon(x, y, color) {
    ctx.beginPath();
    for (let i = 0; i < 6; i++) {
      const angle = Math.PI / 3 * i;
      const dx = hexSize * Math.cos(angle);
      const dy = hexSize * Math.sin(angle);
      if (i === 0) {
        ctx.moveTo(x + dx, y + dy);
      } else {
        ctx.lineTo(x + dx, y + dy);
      }
    }
    ctx.closePath();

    ctx.stroke();
    ctx.fillStyle = '#ffffff'; // Fill color
    ctx.fill();
  }

  function drawHexagonGrid() {
    const rows = Math.ceil(canvas.height / hexHeight) + 1;
    const cols = Math.ceil(canvas.width / hexWidth) + 1;

    console.log({ rows, cols });
    for (let row = 0; row < rows; row++) {
      for (let col = 0; col < cols; col++) {
        const x = col * hexWidth * 0.75; // Horizontal offset for hexagon spacing
        const y = row * hexHeight + (col % 2) * (hexHeight / 2); // Vertical offset for hexagon spacing
        drawHexagon(x, y);
      }
    }
  }
  drawHexagonGrid();

  function getPixelValue(imageData, x, y) {
    const index = (y * imageData.width + x) * 4; // Each pixel has 4 values (R, G, B, A)
    const red = imageData.data[index];
    const green = imageData.data[index + 1];
    const blue = imageData.data[index + 2];

    // Treat near-white pixels (R, G and B all above 240) as '1', everything else as '0'
    const isWhite = red > 240 && green > 240 && blue > 240;
    return isWhite ? '1' : '0';
  }

  function calculateDistance(x1, y1, x2, y2) {
    return Math.sqrt((x2 - x1) ** 2 + (y2 - y1) ** 2);
  }

  // Keep a point only if it is at least `minDistance` away from every point already kept
  function filterChangesByDistance(changes, minDistance) {
    const filteredChanges = [];

    changes.forEach((current) => {
      if (filteredChanges.length === 0) {
        filteredChanges.push(current);
        return;
      }

      let isFarEnough = true;
      filteredChanges.forEach((existing) => {
        if (calculateDistance(current.x, current.y, existing.x, existing.y) < minDistance) {
          isFarEnough = false;
        }
      });

      if (isFarEnough) {
        filteredChanges.push(current);
      }
    });

    return filteredChanges;
  }

  function calculateDVariable(ctx, x, y) {
    const imageData = ctx.getImageData(0, 0, ctx.canvas.width, ctx.canvas.height);
    const surroundingPixels = [
      { dx: -1, dy: -1 }, // Top-left
      { dx: 0, dy: -1 },  // Top
      { dx: 1, dy: -1 },  // Top-right
      { dx: 1, dy: 0 },   // Right
      { dx: 1, dy: 1 },   // Bottom-right
      { dx: 0, dy: 1 },   // Bottom
      { dx: -1, dy: 1 },  // Bottom-left
      { dx: -1, dy: 0 }   // Left
    ];

    let d = '';

    surroundingPixels.forEach(({ dx, dy }) => {
      const newX = x + dx;
      const newY = y + dy;

      // Check if the new coordinates are within the canvas bounds
      if (newX >= 0 && newX < ctx.canvas.width && newY >= 0 && newY < ctx.canvas.height) {
        const pixelValue = getPixelValue(imageData, newX, newY);
        d += pixelValue; // Append the pixel value ('0' or '1') to `d`
      } else {
        d += '0'; // Out of bounds: default to '0'
      }
    });

    // Debug: Log the 'd' variable and coordinates
    // console.log(`Pixel (${x}, ${y}): Surrounding pixels -> ${d}`);

    return d;
  }

  function calculateDForAllPixels(ctx) {
    const canvasWidth = 125;
    const canvasHeight = 125;
    const result = []; // To store the d value for each pixel

    for (let y = 0; y < canvasHeight; y++) {
      for (let x = 0; x < canvasWidth; x++) {
        const d = calculateDVariable(ctx, x, y);
        result.push({ x, y, d }); // Store the result with the pixel coordinates
      }
    }

    return result;
  }

  const result = calculateDForAllPixels(ctx);

  // `data` is the lookup map described above (8-digit code -> value). It is not
  // part of this listing; a value of 0 marks a change in direction.
  const changesInDirection = result.filter((item) => data[item.d] === 0);

  const filteredChanges = filterChangesByDistance(changesInDirection, 30);

  const imageData = ctx.getImageData(0, 0, 125, 125);
  for (let k = 0; k < filteredChanges.length; k++) {
    const x = filteredChanges[k].x;
    const y = filteredChanges[k].y;

    // Calculate the index of the pixel in the ImageData array
    const index = (y * imageData.width + x) * 4;

    // Set the pixel to red (RGBA format)
    imageData.data[index] = 255;     // Red
    imageData.data[index + 1] = 0;   // Green
    imageData.data[index + 2] = 0;   // Blue
    imageData.data[index + 3] = 255; // Alpha (255 is fully opaque)
  }

  // Update the canvas with the modified ImageData
  ctx.putImageData(imageData, 0, 0);
}
```

I'm not going to go through this in depth, but I will briefly describe it. We build a hexagon pattern (from ChatGPT), then sample each pixel and determine what colour it is. Based on this colour we determine if it's a 1 or a 0. We do this for every pixel.

In addition, we ensure there's only one data point per 20-60 pixels, depending on how detailed you want the vertices that we will project onto the mesh to be.
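To show how the spacing threshold thins out the points, here is a compact sketch of the same greedy idea as `filterChangesByDistance`, run on made-up sample points. The name `filterByDistance` and the sample data are assumptions for illustration only.

```javascript
// Greedy thinning: keep a point only if it is at least `minDistance`
// away from every point that has already been kept.
function filterByDistance(points, minDistance) {
  const kept = [];
  for (const point of points) {
    const farEnough = kept.every(
      (other) => Math.hypot(point.x - other.x, point.y - other.y) >= minDistance
    );
    if (farEnough) kept.push(point);
  }
  return kept;
}

// Made-up points along a horizontal line.
const samplePoints = [
  { x: 0, y: 0 }, { x: 10, y: 0 }, { x: 25, y: 0 }, { x: 50, y: 0 }, { x: 70, y: 0 },
];
console.log(filterByDistance(samplePoints, 20).length); // 4
console.log(filterByDistance(samplePoints, 60).length); // 2
```

Because the filter is greedy, the result depends on the order of the input: earlier points win over later ones that fall too close.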

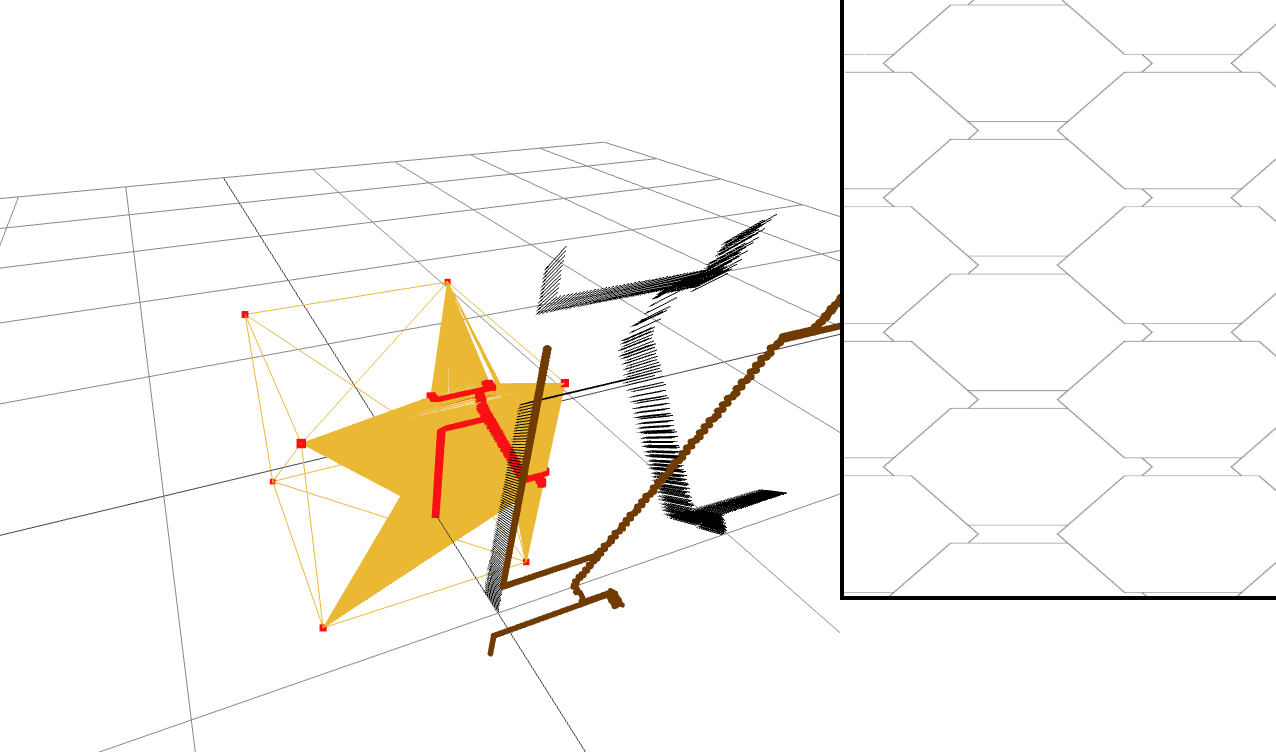

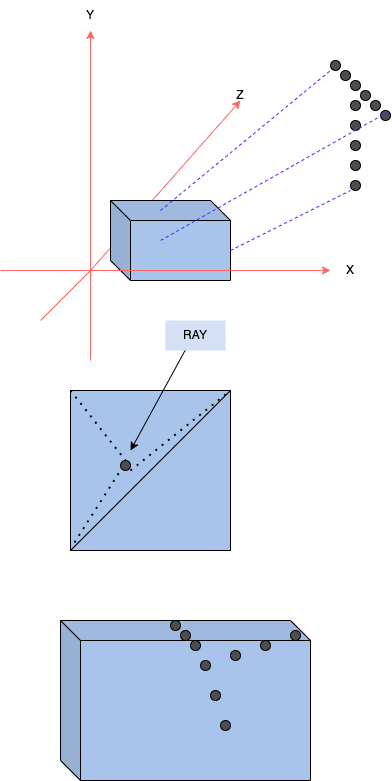

So now we have data for the vertices. So how on God's earth do we get these vertices onto a 3D mesh? Consider this:

The idea is that you place the data as points in 3D space somewhere near the mesh. The proximity is not too important, but the orientation is: the points should face the mesh. As it's a 2D pattern, this is quite easy.
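A minimal sketch of this placement step, using plain objects rather than `THREE.Vector3`. The scale of 100 and the plane at `z = 1` mirror the values used in the React code further down; the helper names `toPlanePoints` and `directionTo` are hypothetical.

```javascript
// Map 2D pixel coordinates onto a plane in front of the mesh.
function toPlanePoints(coords, scale = 100, z = 1) {
  return coords.map(({ x, y }) => ({ x: x / scale, y: y / scale, z }));
}

// Unit direction from `point` towards `target` (the origin by default).
// Assumes `point` and `target` are not the same position.
function directionTo(point, target = { x: 0, y: 0, z: 0 }) {
  const dx = target.x - point.x;
  const dy = target.y - point.y;
  const dz = target.z - point.z;
  const length = Math.hypot(dx, dy, dz);
  return { x: dx / length, y: dy / length, z: dz / length };
}

const [first] = toPlanePoints([{ x: 50, y: 25 }]);
console.log(first); // { x: 0.5, y: 0.25, z: 1 }
console.log(directionTo({ x: 0, y: 0, z: 2 })); // points along negative z
```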

Once we have the points facing the mesh, we raycast from each pattern point towards the origin (if the mesh is centred there, or otherwise towards the centre of the mesh's bounding box). The raycast tells us which face was intersected.
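For readers who want to see what the ray-versus-face test involves, here is a dependency-free sketch of the Möller–Trumbore ray-triangle test on plain `[x, y, z]` arrays. It is a stand-in for illustration, not the three.js implementation (the React code below relies on `THREE.Ray.intersectTriangle`), and it tests both sides of the triangle, like passing `false` for backface culling there.

```javascript
const sub = (a, b) => [a[0] - b[0], a[1] - b[1], a[2] - b[2]];
const dot = (a, b) => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
const cross = (a, b) => [
  a[1] * b[2] - a[2] * b[1],
  a[2] * b[0] - a[0] * b[2],
  a[0] * b[1] - a[1] * b[0],
];

// Returns the hit point as [x, y, z], or null if the ray misses triangle (a, b, c).
function intersectTriangle(origin, direction, a, b, c, epsilon = 1e-9) {
  const edge1 = sub(b, a);
  const edge2 = sub(c, a);
  const p = cross(direction, edge2);
  const det = dot(edge1, p);
  if (Math.abs(det) < epsilon) return null; // ray is parallel to the triangle

  const invDet = 1 / det;
  const toOrigin = sub(origin, a);
  const u = dot(toOrigin, p) * invDet; // first barycentric coordinate
  if (u < 0 || u > 1) return null;

  const q = cross(toOrigin, edge1);
  const v = dot(direction, q) * invDet; // second barycentric coordinate
  if (v < 0 || u + v > 1) return null;

  const t = dot(edge2, q) * invDet; // distance along the ray
  if (t < 0) return null; // triangle is behind the ray origin

  return [
    origin[0] + direction[0] * t,
    origin[1] + direction[1] * t,
    origin[2] + direction[2] * t,
  ];
}

const hit = intersectTriangle(
  [0, 0, 2], [0, 0, -1],
  [-1, -1, 0.5], [1, -1, 0.5], [0, 1, 0.5]
);
console.log(hit); // [0, 0, 0.5]
```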

Given the face where the intersection occurred, we can construct 3 new triangles from the old one.

This is highlighted in the 2nd image of the diagram above: one triangle is split into 3 smaller ones.
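The index bookkeeping behind the split can be sketched without any 3D library. This assumes an indexed triangle list with flat `positions` and `indices` arrays, which is how an indexed `BufferGeometry` stores them; the function name `splitTriangle` and its parameters are made up for the example.

```javascript
// positions: flat [x, y, z, ...] array; indices: flat triangle index list;
// faceStart: offset of the hit triangle inside `indices`; point: [x, y, z].
function splitTriangle(positions, indices, faceStart, point) {
  const [a, b, c] = indices.slice(faceStart, faceStart + 3);
  const p = positions.length / 3; // index the new vertex will get

  return {
    positions: [...positions, ...point],
    indices: [
      ...indices.slice(0, faceStart),  // triangles before the hit face
      ...indices.slice(faceStart + 3), // triangles after the hit face
      a, b, p, // three triangles fanning around the new vertex,
      b, c, p, // each keeping the winding order of the original face
      c, a, p,
    ],
  };
}

const split = splitTriangle([0, 0, 0, 1, 0, 0, 0, 1, 0], [0, 1, 2], 0, [0.25, 0.25, 0]);
console.log(split.indices); // [0, 1, 3, 1, 2, 3, 2, 0, 3]
console.log(split.positions.length / 3); // 4
```

Each split adds one vertex and a net two triangles, so the index list grows by six entries per projected point.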

The code for playing around with this is here:

```jsx
import React, { useState, useEffect, useRef } from 'react';
import { useThree } from '@react-three/fiber';
import { OrbitControls, Sphere, Box } from '@react-three/drei';
import * as THREE from 'three';
import { data as coordinates } from '../../txt';

// Return a copy of the index list `arr` without any triangle whose three
// indices are all contained in `source`.
function filterArray(source, arr) {
  const result = [];
  for (let i = 0; i < arr.length; i += 3) {
    const index1 = arr[i];
    const index2 = arr[i + 1];
    const index3 = arr[i + 2];

    if (
      source.includes(index1) &&
      source.includes(index2) &&
      source.includes(index3)
    ) {
      continue;
    }

    result.push(index1, index2, index3);
  }
  return result;
}

function removeFaceAndAddNewFaces(geometry, faceIndex, intersectionPoint) {
  const positions = geometry.attributes.position.array;
  const indices = geometry.index.array;

  const intersectionFaceIndexA = faceIndex[0];
  const intersectionFaceIndexB = faceIndex[1];
  const intersectionFaceIndexC = faceIndex[2];

  console.log({ intersectionFaceIndexA, intersectionFaceIndexB, intersectionFaceIndexC });

  // The new vertex is appended after the existing ones,
  // so its index equals the old vertex count.
  const newAIndex = positions.length / 3;
  const newVertices = [intersectionPoint.x, intersectionPoint.y, intersectionPoint.z];

  // Three triangles fanning around the new vertex,
  // each with the same winding order as the original face.
  const newIndices = [
    intersectionFaceIndexA, intersectionFaceIndexB, newAIndex,
    intersectionFaceIndexB, intersectionFaceIndexC, newAIndex,
    intersectionFaceIndexC, intersectionFaceIndexA, newAIndex,
  ];

  const data = new Float32Array([...positions, ...newVertices]);
  geometry.setAttribute('position', new THREE.Float32BufferAttribute(data, 3));

  // Drop the face that was hit, then add the three faces that replace it
  const filteredArrIndex = filterArray(
    [intersectionFaceIndexA, intersectionFaceIndexB, intersectionFaceIndexC],
    indices
  );
  const dataIndex = new Uint32Array([...filteredArrIndex, ...newIndices]);
  geometry.setIndex(new THREE.BufferAttribute(dataIndex, 1));
  geometry.setDrawRange(0, dataIndex.length);

  geometry.attributes.position.needsUpdate = true;
  geometry.index.needsUpdate = true;
  geometry.computeVertexNormals();
  geometry.computeBoundingSphere();
  geometry.computeBoundingBox();
}

const insertVertex = (vertex, geometry, pointsGeom, scene) => {
  if (!geometry) return; // The mesh is not mounted yet

  const point = vertex;
  const positionAttribute = geometry.getAttribute('position');

  // Cast a ray from the pattern point towards the origin
  const origin = new THREE.Vector3(0, 0, 0);
  const rayDirection = new THREE.Vector3().subVectors(origin, point).normalize();
  const ray = new THREE.Ray(point, rayDirection);

  // Visualise the ray for debugging
  const arrowHelper = new THREE.ArrowHelper(rayDirection, point, 0.1, 0x000000);
  scene.add(arrowHelper);

  const index = geometry.getIndex();
  const vertices = positionAttribute.array;
  const intersectedFaces = [];
  const intersectionPoint = new THREE.Vector3();

  if (index) {
    const indices = index.array;
    for (let i = 0; i < indices.length; i += 3) {
      const a = new THREE.Vector3().fromArray(vertices, indices[i] * 3);
      const b = new THREE.Vector3().fromArray(vertices, indices[i + 1] * 3);
      const c = new THREE.Vector3().fromArray(vertices, indices[i + 2] * 3);

      const intersected = ray.intersectTriangle(a, b, c, false, intersectionPoint);

      if (intersected) {
        intersectedFaces.push({
          faceIndex: [indices[i], indices[i + 1], indices[i + 2]],
          vertices: [a, b, c],
          intersectionPoint: intersectionPoint.clone(),
        });
      }
    }

    if (intersectedFaces.length) {
      removeFaceAndAddNewFaces(geometry, intersectedFaces[0].faceIndex, intersectedFaces[0].intersectionPoint);
      removeFaceAndAddNewFaces(pointsGeom.current, intersectedFaces[0].faceIndex, intersectedFaces[0].intersectionPoint);
      console.log('Face split completed.');
    } else {
      console.log('No intersection with the face.');
    }
  }
};

function Spheres() {
  const [, setData] = useState();
  const { scene } = useThree();
  const boxRef = useRef();
  const pointsGeom = useRef();
  const shapeRef = useRef();

  useEffect(() => {
    const b = new THREE.BufferGeometry().setFromPoints(
      coordinates.map((item) => new THREE.Vector3(item.x, item.y, 1))
    );

    const m = new THREE.Mesh(b, new THREE.MeshBasicMaterial());

    m.rotateX(Math.PI);

    const buff = m.geometry;

    const geometry = boxRef.current?.geometry;
    if (buff) {
      setData(buff);
      const vertices = buff.attributes.position.array;

      // Scale the pixel coordinates down and project each point onto the box
      for (let i = 0; i < vertices.length; i += 3) {
        const vector = new THREE.Vector3(vertices[i] / 100, vertices[i + 1] / 100, 1);
        insertVertex(vector, geometry, pointsGeom, scene);
      }
    }
  }, []);

  return (
    <>
      <OrbitControls />
      <ambientLight intensity={0.5} />
      <pointLight position={[10, 10, 10]} />
      <gridHelper />

      <group ref={shapeRef} rotation={[Math.PI, 0, 0]} position={[0, 1, 1]}>
        {coordinates.map((coord, index) => (
          <Sphere key={index} position={[coord.x / 100.0, coord.y / 100.0, 0]} args={[0.01, 32, 32]}>
            <meshStandardMaterial attach="material" color="orange" />
          </Sphere>
        ))}
      </group>

      <Box args={[1, 1, 1]} ref={boxRef}>
        <meshBasicMaterial attach="material" color="orange" wireframe />
      </Box>

      <points scale={[1, 1, 1]}>
        <boxGeometry ref={pointsGeom} />
        <pointsMaterial size={0.04} color={"red"} />
      </points>
    </>
  );
}

export default Spheres;
```

And this is a whistle-stop tour of turning textures into 3D data, or vertices on a 3D mesh. If you want to discuss it, send me a message: [email protected].