This is a very quick post explaining how we can use a flood fill algorithm to fill an opacity mask image, which is placed on the surface of a custom mesh via a decal.

The premise is that we render the scene to a render target and then ping-pong it to allow for feedback, i.e. the end result of each frame is passed back in as the input to the next.

Below is the CodeSandbox, with some links I found useful listed at the top of it.

It is a port of this Shadertoy, which uses buffers and a flood fill algorithm.

Principles

Buffer A in Shadertoy corresponds to an offscreen render target in three.js: you create a plane, a separate scene and an orthographic camera so that the plane acts as a full-screen quad. Nothing else is rendered but the plane when the orthographic camera looks down on it.

A buffer can also refer to a texture; which term is used more depends on which corner of graphics you come from. When Shadertoy calls a tab a buffer, it really means rendering that code to a texture/buffer and passing the result to mainImage or to another buffer.

So how can we render this offscreen buffer, pass it to the on-screen material and also feed the end result back in as the starting texture?

Well, we need useFrame and the ping-pong method, which sets the end result to be the starting texture for the next frame.

```javascript
// Create an orthographic camera
const cameraOrtho = new THREE.OrthographicCamera(-1, 1, 1, -1, 0, 1);

const plane = new THREE.PlaneGeometry(2, 2);
let bufferObject = new THREE.Mesh(plane, bufferMaterial);
bufferScene.add(bufferObject);
// In the render loop
useFrame(({ clock, gl, camera: camA }) => {
  const time = clock.elapsedTime;

  gl.setRenderTarget(textureB);
  gl.clear();
  gl.render(bufferScene, cameraOrtho);

  //Swap textureA and B
  var t = textureA;
  textureA = textureB;
  textureB = t;

  matRef.current.map = textureA.texture;
  bufferMaterial.uniforms.sourceTexture.value = branchWalking;

  bufferMaterial.uniforms.bufferTexture.value = textureA.texture;
  bufferMaterial.uniforms.iResolution.value = new THREE.Vector2(
    branchWalking.image.width,
    branchWalking.image.height
  );
  bufferMaterial.uniforms.iTime.value = time;
  bufferMaterial.uniforms.iMouse.value = mouseUV;

  bufferMaterial.uniforms.clicked.value = clicked;

  gl.setRenderTarget(null);
  gl.clear();

  gl.render(scene, camera);
});
```

As you can see, we set up the offscreen quad-like plane and the buffer material.

```javascript
gl.setRenderTarget(textureB);
gl.clear();
gl.render(bufferScene, cameraOrtho);

//Swap textureA and B
var t = textureA;
textureA = textureB;
textureB = t;
```

This is the key bit: we render the offscreen buffer scene, which contains the plane and the orthographic camera, into textureB and then swap textureA and textureB, A being the start (the texture that is read) and B being the end (the texture that is written).
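The swap is easier to see without any GPU code. The sketch below is a plain JavaScript stand-in, not the sandbox code: it assumes two arrays play the role of textureA and textureB, and the `createPingPong` helper and `spreadRight` step function are hypothetical names made up for this illustration.

```javascript
// Plain-JS stand-in for ping-pong rendering (no three.js involved).
// `read` plays the role of textureA (last frame's result),
// `write` plays the role of textureB (this frame's render target).
function createPingPong(initial) {
  let read = Float32Array.from(initial);
  let write = new Float32Array(initial.length);

  return {
    // Run one "frame": every output cell is computed from the previous frame only.
    frame(step) {
      for (let i = 0; i < read.length; i++) {
        write[i] = step(read, i);
      }
      // Swap: this frame's output becomes next frame's input.
      const t = read;
      read = write;
      write = t;
    },
    current() {
      return Array.from(read);
    },
  };
}

// Hypothetical step: a cell turns on if it or its left neighbour was on.
const spreadRight = (prev, i) => (prev[i] === 1 || prev[i - 1] === 1 ? 1 : 0);

const buffers = createPingPong([1, 0, 0, 0]);
buffers.frame(spreadRight);
const afterOne = buffers.current(); // [1, 1, 0, 0]
buffers.frame(spreadRight);
const afterTwo = buffers.current(); // [1, 1, 1, 0]
```

Because each frame only reads the previous frame's result, the pattern can only grow by one cell per frame, which is the same reason the fill in the shader spreads gradually rather than all at once.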

The source and buffer textures correspond to iChannel0 and iChannel1 in the Shadertoy.

The resolution is the resolution of the source image, not the window.
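The reason the image resolution matters is that the shader steps to neighbouring pixels by adding 1 / iResolution to the UV. As a rough illustration in plain JavaScript (the `neighbourUVs` helper and the 512x256 size are assumptions for this sketch, not values from the sandbox):

```javascript
// One texel in UV space is 1 / resolution, so the four neighbours of a UV
// coordinate are offset by that amount along each axis.
function neighbourUVs(uv, resolution) {
  const dx = 1 / resolution.width;
  const dy = 1 / resolution.height;
  return {
    north: { x: uv.x, y: uv.y + dy },
    south: { x: uv.x, y: uv.y - dy },
    east: { x: uv.x + dx, y: uv.y },
    west: { x: uv.x - dx, y: uv.y },
  };
}

// Example with an assumed 512x256 source image.
const neighbours = neighbourUVs({ x: 0.5, y: 0.5 }, { width: 512, height: 256 });
// neighbours.east.x  is 0.5 + 1 / 512
// neighbours.north.y is 0.5 + 1 / 256
```

If the window size were used instead, the offsets would no longer line up with one texel of the image and the fill could skip or re-sample pixels.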

The Shader

The vertex shader is standard and nothing special is going on there.

The fragment shader is where all the magic happens and the growth occurs.

Just to be clear, the code below is mostly not mine, i.e. I have only ported it and modified some of it, using tutorials and resources online.

```glsl
#define COLOR_TOLERANCE .9125
#define CLICK_TOLERANCE .010

uniform sampler2D sourceTexture;
uniform sampler2D bufferTexture;
uniform vec2 iMouse;
varying vec2 vUv;
uniform vec2 iResolution;
uniform float iTime;
uniform bool clicked;

${noise}

void main() {
  // Thanks to: https://www.shadertoy.com/view/ldjSzd
  // For explaining how the mouse coordinates work in ShaderToy.
  bool is_mouse_down = !(clicked);
  vec2 mouse_coords = iMouse.xy;

  if (is_mouse_down || iTime <= 0.125) {
    // Just update the buffer with a new copy of the source frame.
    gl_FragColor = texture(sourceTexture, vUv);
    gl_FragColor.w = 1.0;
  } else {
    // Flood fill.
    float noise = snoise(vec3(vUv, 1.0) * 55.0 + iTime * 3.0);

    vec4 thispixel = texture(bufferTexture, vUv);
    vec4 clickpixel = texture(bufferTexture, iMouse.xy);
    vec4 north = texture(bufferTexture, vUv + vec2( 0.0,  1.0) * 1.0 / iResolution);
    vec4 south = texture(bufferTexture, vUv + vec2( 0.0, -1.0) * 1.0 / iResolution);
    vec4 east  = texture(bufferTexture, vUv + vec2( 1.0,  0.0) * 1.0 / iResolution);
    vec4 west  = texture(bufferTexture, vUv + vec2(-1.0,  0.0) * 1.0 / iResolution);

    gl_FragColor = texture(bufferTexture, vUv);
    gl_FragColor.w = 1.0;
    if (distance(vUv.xy, mouse_coords) < CLICK_TOLERANCE) {
      // Initial pixel of the flood fill.
      // Filled pixels are marked with r = 1.0.
      gl_FragColor.r = 1.0;
      gl_FragColor.b = .0;
      gl_FragColor.g = .0;
      gl_FragColor.a = 1.0;
    } else if (
      (north.r >= 1.0 ||
       south.r >= 1.0 ||
       west.r >= 1.0 ||
       east.r >= 1.0)
    ) {
      // This pixel is neighbor to a filled pixel.
      // Should this one be filled as well?
      if (distance(thispixel.xyz * 1.0 / noise * 0.1, clickpixel.xyz) < COLOR_TOLERANCE) {
        gl_FragColor.r = 1.0;
      }
    }
  }
}
```

Step by step we will go through this.

The first part makes sure that we start by rendering the initial source image to the buffer.

```glsl
if (is_mouse_down || iTime <= 0.125) {
  // Just update the buffer with a new copy of the source frame.
  gl_FragColor = texture(sourceTexture, vUv);
  gl_FragColor.w = 1.0;
}
```

Once we have clicked and have gone past the initial frames of rendering (iTime above 0.125), we enter the flood fill part of the shader.
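Because `is_mouse_down` is defined as `!(clicked)` in the shader, the branch is easy to misread. Here is the same condition restated in plain JavaScript, purely as an illustration; `shouldCopySource` is a hypothetical name and not part of the sandbox.

```javascript
// Mirrors the shader's `if (is_mouse_down || iTime <= 0.125)` branch,
// where is_mouse_down is `!(clicked)`.
function shouldCopySource(clicked, iTime) {
  const isMouseDown = !clicked;
  return isMouseDown || iTime <= 0.125;
}

shouldCopySource(false, 5.0); // true: no click yet, keep copying the source image
shouldCopySource(true, 0.05); // true: still inside the first 0.125 seconds
shouldCopySource(true, 5.0);  // false: clicked and past start-up, so the flood fill runs
```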

```glsl
// Flood fill.
float noise = snoise(vec3(vUv, 1.0) * 55.0 + iTime * 3.0);

vec4 thispixel = texture(bufferTexture, vUv);
vec4 clickpixel = texture(bufferTexture, iMouse.xy);
vec4 north = texture(bufferTexture, vUv + vec2( 0.0,  1.0) * 1.0 / iResolution);
vec4 south = texture(bufferTexture, vUv + vec2( 0.0, -1.0) * 1.0 / iResolution);
vec4 east  = texture(bufferTexture, vUv + vec2( 1.0,  0.0) * 1.0 / iResolution);
vec4 west  = texture(bufferTexture, vUv + vec2(-1.0,  0.0) * 1.0 / iResolution);

gl_FragColor = texture(bufferTexture, vUv);
gl_FragColor.w = 1.0;
```

This shows how we grab the pixels around the current one; they are used to check whether any of the surrounding pixels are red.

```glsl
gl_FragColor = texture(bufferTexture, vUv);
gl_FragColor.w = 1.0;
if (distance(vUv.xy, mouse_coords) < CLICK_TOLERANCE) {
  // Initial pixel of the flood fill.
  // Filled pixels are marked with r = 1.0.
  gl_FragColor.r = 1.0;
  gl_FragColor.b = .0;
  gl_FragColor.g = .0;
  gl_FragColor.a = 1.0;
}
```

The first condition we check is whether the point where we clicked is close to the current UV, essentially whether this distance is less than the click tolerance. If it is, we set the color to red; the frame is then rendered for every pixel and fed back to the start of the pipeline.
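The seed test is just a distance check in UV space. A minimal JavaScript restatement follows, assuming a tolerance of 0.01 for the sketch; `isSeed` is a hypothetical helper name.

```javascript
// Mirrors `distance(vUv.xy, mouse_coords) < CLICK_TOLERANCE`.
// Both points are in UV space (0..1), so the seed is a small disc around the click.
const CLICK_TOLERANCE = 0.01; // assumed value for this sketch

function isSeed(uv, mouseUV, tolerance = CLICK_TOLERANCE) {
  return Math.hypot(uv.x - mouseUV.x, uv.y - mouseUV.y) < tolerance;
}

isSeed({ x: 0.5, y: 0.5 }, { x: 0.505, y: 0.5 }); // true, about 0.005 away
isSeed({ x: 0.5, y: 0.5 }, { x: 0.52, y: 0.5 });  // false, about 0.02 away
```

Note that the distance is measured in UV units, so on a non-square image the seed covers a different number of pixels horizontally than vertically.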

So we have some initial color now!

Next we need to check whether the surrounding pixels are red.

```glsl
else if (
  (north.r >= 1.0 ||
   south.r >= 1.0 ||
   west.r >= 1.0 ||
   east.r >= 1.0)
) {
  // This pixel is neighbor to a filled pixel.
  // Should this one be filled as well?
  if (distance(thispixel.xyz * 1.0 / noise * 0.1, clickpixel.xyz) < COLOR_TOLERANCE) {
    gl_FragColor.r = 1.0;
  }
}
```

Each pixel north, south, east and west of the current pixel is checked to see whether it is red. If one of them is, there is a final check on how similar the colors are, as we only want similar colors to fill and not everything on screen.

```glsl
// This pixel is neighbor to a filled pixel.
// Should this one be filled as well?
if (distance(thispixel.xyz * 1.0 / noise * 0.1, clickpixel.xyz) < COLOR_TOLERANCE) {
  gl_FragColor.r = 1.0;
}
```
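To see the neighbour check and the similarity check working together, here is a CPU sketch of one fill pass in plain JavaScript. It is a simplified variant rather than a port of the shader: it assumes a separate `filled` mask instead of the red channel, grayscale values instead of RGB, and it leaves out the noise term. `floodFillStep` and the sample data are made up for the illustration.

```javascript
// CPU sketch of one flood-fill pass over a small grayscale image.
// Simplifications (not in the original shader): a separate `filled` mask
// instead of the red channel, grayscale values instead of RGB, no noise term.
function floodFillStep(image, filled, width, height, seedValue, tolerance) {
  const next = filled.slice(); // write to a copy, like rendering into textureB
  const isFilled = (x, y) =>
    x >= 0 && x < width && y >= 0 && y < height && filled[y * width + x] === 1;

  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      const i = y * width + x;
      if (filled[i] === 1) continue;
      const hasFilledNeighbour =
        isFilled(x, y + 1) || isFilled(x, y - 1) ||
        isFilled(x + 1, y) || isFilled(x - 1, y);
      // Only grow into pixels whose value is close to the clicked value.
      if (hasFilledNeighbour && Math.abs(image[i] - seedValue) < tolerance) {
        next[i] = 1;
      }
    }
  }
  return next;
}

// 5x1 strip: four similar pixels, then a very different one.
const image = [0.9, 0.9, 0.8, 0.9, 0.1];
let filled = [1, 0, 0, 0, 0]; // seed at x = 0
const frames = [];
for (let f = 0; f < 4; f++) {
  filled = floodFillStep(image, filled, 5, 1, image[0], 0.2);
  frames.push(filled.join(""));
}
// frames: ["11000", "11100", "11110", "11110"]
```

The fill advances one pixel per pass and stops at the dark pixel, because its value is too far from the seed value for the given tolerance.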

Have a play with the color tolerance and see what happens.
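To experiment away from the GPU, the comparison can be restated in JavaScript. This is only a sketch: `passesColorTest` is a hypothetical helper, the grey and red sample colors are made up, and it assumes `snoise` returns values roughly between -1 and 1, as simplex noise implementations typically do.

```javascript
// Restates `distance(thispixel.xyz * 1.0 / noise * 0.1, clickpixel.xyz) < COLOR_TOLERANCE`.
// GLSL evaluates * and / left to right, so each channel ends up scaled by 0.1 / noise.
function passesColorTest(pixel, click, noise, tolerance) {
  const scaled = pixel.map((c) => (c * 1.0) / noise * 0.1);
  const dist = Math.hypot(
    scaled[0] - click[0],
    scaled[1] - click[1],
    scaled[2] - click[2]
  );
  return dist < tolerance;
}

const grey = [0.8, 0.8, 0.8];
const red = [1.0, 0.0, 0.0]; // assumed click color for this sketch

passesColorTest(grey, red, 0.5, 0.9125);  // true  (distance is about 0.87)
passesColorTest(grey, red, 0.05, 0.9125); // false (small noise values blow the color up)
passesColorTest(grey, red, 0.5, 0.5);     // false (a tighter tolerance rejects it)
```

The noise value changes per pixel and per frame, which is what makes the growth look irregular rather than spreading as a clean diamond.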

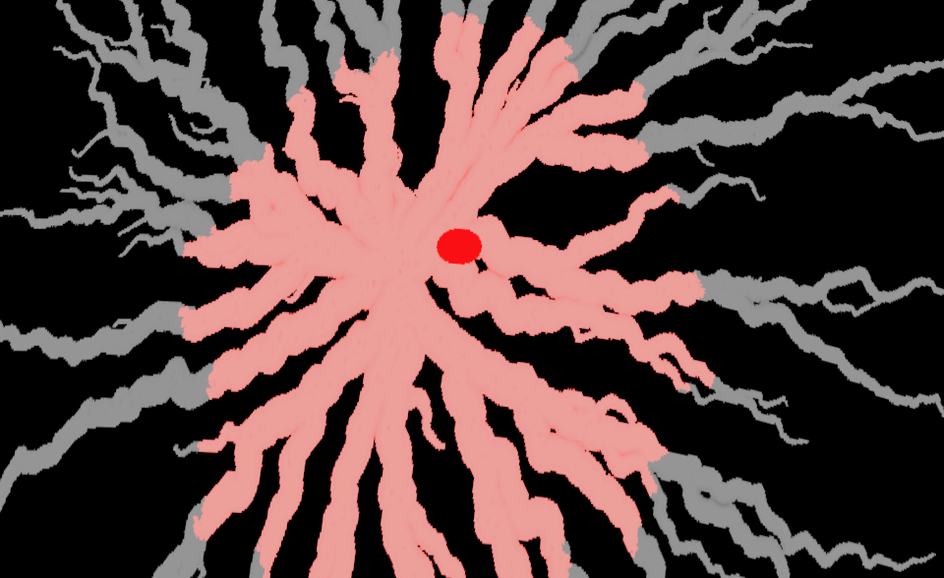

The actual base image

This was created in Blender using shortest path geometry nodes and then rendering the resulting geometry from a top-down camera.

The shortest path setup works like this: the starting positions for the shortest paths are all random, and the center is the end point. The final step is to reduce the radius of the path as you go further from the origin.

I will do a write-up on this geometry node setup; it can be incredibly useful for things like the roots of trees.